Near-quantum accuracy meets real-world applicability with InstaDeep’s open-source library for Machine Learning Interatomic Potentials

Disclaimer: Unless explicitly cited otherwise, all claims in this post are supported by our research paper, "Machine Learning Interatomic Potentials: library for efficient training, model development and simulation of molecular systems".

Understanding molecular behaviour allows researchers to predict the physical and chemical properties of complex systems [1], such as how a protein folds or how a drug binds to its target. These insights are critical across biology, chemistry, and materials science [2], especially when experiments are costly, time-consuming, or difficult to scale.

Yet molecular science has long grappled with a trade-off between computational efficiency and precision. Traditional simulation methods either favour speed at the expense of accuracy or incur substantial computational cost to capture molecular behaviour faithfully [3].

Historically, scientists have relied on two main methods to model molecular systems: empirical force fields and Density Functional Theory (DFT). Force fields offer speed, using simple mathematical models to approximate atomic behaviours, but often lack the accuracy needed to capture complex reactions or subtle interactions. In contrast, DFT provides high-precision predictions based on electron density, but is prohibitively resource-intensive for large systems or long simulations.
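To make "simple mathematical models" concrete, here is a minimal sketch of one classic force-field term, the Lennard-Jones pair potential, in plain Python. It is an illustration only, not code from mlip or the paper; the function names and the reduced units (epsilon = sigma = 1) are assumptions chosen for the example.

```python
import math


def lennard_jones_energy(r, epsilon=1.0, sigma=1.0):
    """Pair energy E(r) = 4 * epsilon * ((sigma / r)**12 - (sigma / r)**6)."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def total_energy(positions, epsilon=1.0, sigma=1.0):
    """Sum the pair energy over every unique pair of atoms."""
    energy = 0.0
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            r = math.dist(positions[i], positions[j])
            energy += lennard_jones_energy(r, epsilon, sigma)
    return energy


# Three atoms on an equilateral triangle whose side is the
# energy-minimising distance r_min = 2**(1/6) * sigma.
r_min = 2.0 ** (1.0 / 6.0)
triangle = [
    (0.0, 0.0, 0.0),
    (r_min, 0.0, 0.0),
    (r_min / 2.0, r_min * math.sqrt(3.0) / 2.0, 0.0),
]
print(total_energy(triangle))
```

A handful of parameters and a closed-form expression make this very cheap to evaluate, but the fixed functional form is also why such models struggle with bond breaking and other subtle electronic effects.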

So, is it possible to simulate how atoms and molecules interact with both speed and accuracy?

Machine Learning Interatomic Potentials (MLIP) are a novel in silico approach for predicting molecular properties. These models use machine learning (ML) to estimate interatomic forces and energies, offering a compelling alternative to traditional simulation methods. MLIP methods aim to disrupt the long-standing trade-off between accuracy and efficiency, approaching the precision of quantum mechanical techniques like DFT, while reducing computational cost by several orders of magnitude.
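Energies and forces are linked: the force on each atom is the negative gradient of the potential energy with respect to that atom's position. The sketch below illustrates the relationship in plain Python. The `toy_energy` function is a hypothetical stand-in for a trained model, and central finite differences stand in for the automatic differentiation that ML frameworks typically provide; none of this is the mlip API.

```python
import math


def toy_energy(positions, k=1.0, r0=1.0):
    """Hypothetical stand-in for a trained model: harmonic energy over atom pairs."""
    energy = 0.0
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            r = math.dist(positions[i], positions[j])
            energy += 0.5 * k * (r - r0) ** 2
    return energy


def forces_from_energy(energy_fn, positions, h=1e-5):
    """Approximate F = -dE/dx for every coordinate using central differences."""
    forces = []
    for i, atom in enumerate(positions):
        atom_force = []
        for d in range(len(atom)):
            plus = [list(p) for p in positions]
            minus = [list(p) for p in positions]
            plus[i][d] += h
            minus[i][d] -= h
            atom_force.append(-(energy_fn(plus) - energy_fn(minus)) / (2.0 * h))
        forces.append(atom_force)
    return forces


# Two atoms stretched beyond the rest length r0 = 1.0 are pulled back together.
stretched = [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0]]
print(forces_from_energy(toy_energy, stretched))
```

Because forces follow from the energy in this way, a model that predicts a single scalar energy can supply everything a simulation needs to move atoms.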

However, many of today’s most promising MLIP approaches remain inaccessible to researchers without specialised ML expertise—a gap that our mlip library aims to close.

Why do we need mlip?

The mlip library offers a consolidated environment for working with MLIP models, delivering near-quantum accuracy with improved computational efficiency.

Designed to support the full simulation lifecycle, from data preparation and model training to simulation and prediction, it offers a curated suite of pre-trained, high-performance models that combine strong predictive capabilities with efficient inference.

💡 Inference refers to the process of using a trained model to make predictions from new input data. Efficient inference is critical for practical applications like long-timescale simulations of biological systems, where computational bottlenecks can otherwise limit progress [4].

Created with both usability and flexibility in mind, mlip opens up advanced molecular simulation to a much broader community. Biologists and materials scientists can simulate molecular systems without requiring deep ML expertise, while ML researchers can use the library to develop and test new models with minimal barriers.

What does mlip include?

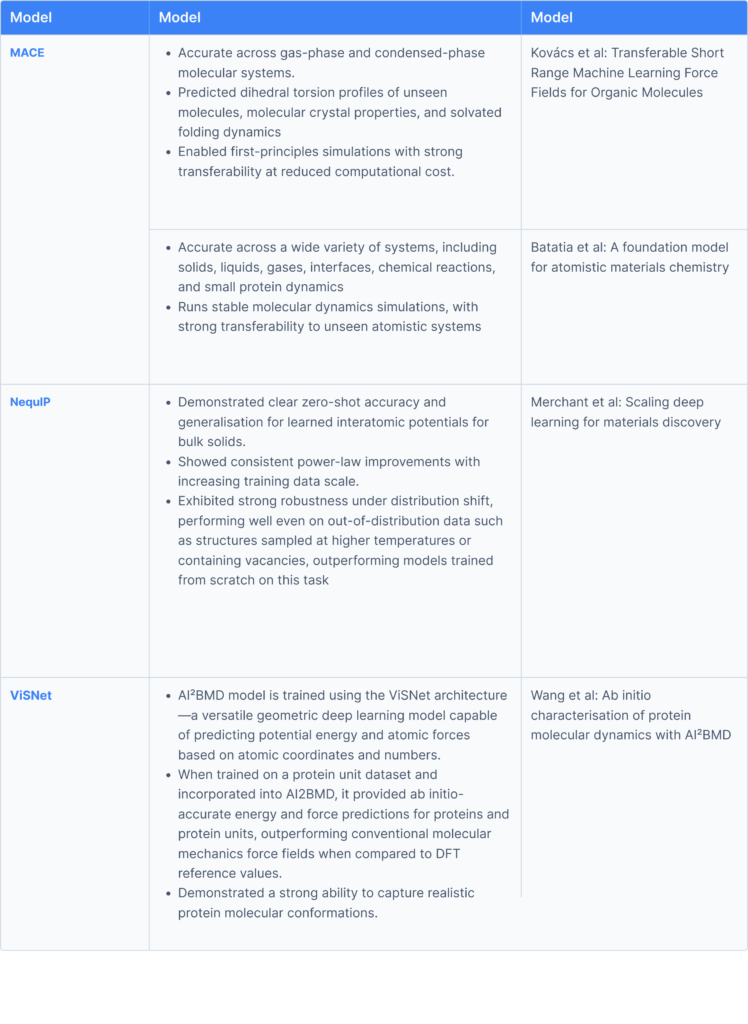

Currently, the library features three graph-based models: MACE, NequIP, and ViSNet—all extensively validated on scientific benchmarks by the wider research community for their performance, as shown in the table below.

To maximise accessibility, mlip provides pre-trained versions of each model, specifically optimised for biochemical interactions. These were trained on a refined version of the SPICE2 dataset, a chemically diverse collection of approximately two million molecular structures computed at a high level of quantum mechanical theory. After curation, the dataset was consolidated to around 1.7 million structures for training, with 88,000 reserved for validation.

To support integration into real-world simulation workflows, mlip includes two molecular dynamics (MD) wrappers: ASE (Atomic Simulation Environment) and JAX MD. These wrappers act as bridges, allowing users to apply ML-generated force fields within established MD engines, without having to rewrite their workflows.

💡 MD wrappers provide a software interface that connects ML models to molecular dynamics engines, enabling seamless simulation without manual reconfiguration.
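As a rough picture of what such a bridge involves, the sketch below shows a bare-bones velocity Verlet integrator in plain Python that accepts any function mapping positions to forces. It is not how ASE, JAX MD, or mlip are implemented; `velocity_verlet` and `spring_forces` are hypothetical names, and the spring model simply stands in for an ML force field.

```python
def velocity_verlet(positions, velocities, masses, force_fn, dt, n_steps):
    """Advance the system in time; force_fn(positions) returns one force vector per atom."""
    pos = [list(p) for p in positions]
    vel = [list(v) for v in velocities]
    forces = force_fn(pos)
    for _ in range(n_steps):
        # Half-step velocity update, then full-step position update.
        for i, m in enumerate(masses):
            for d in range(len(pos[i])):
                vel[i][d] += 0.5 * dt * forces[i][d] / m
                pos[i][d] += dt * vel[i][d]
        # Recompute forces at the new positions and finish the velocity update.
        forces = force_fn(pos)
        for i, m in enumerate(masses):
            for d in range(len(pos[i])):
                vel[i][d] += 0.5 * dt * forces[i][d] / m
    return pos, vel


def spring_forces(positions, k=1.0):
    """Hypothetical stand-in for an ML model: each atom is tethered to the origin."""
    return [[-k * x for x in p] for p in positions]


# One particle on a spring, integrated for roughly one oscillation period.
final_pos, final_vel = velocity_verlet(
    [[1.0]], [[0.0]], [1.0], spring_forces, dt=0.01, n_steps=628
)
print(final_pos, final_vel)
```

The integrator only ever sees the `force_fn` callable, so swapping an analytical force field for a learned one would leave the rest of the workflow untouched. That separation is the idea the wrappers build on.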

The library is designed to support a broad user base, from domain scientists to ML practitioners, and is guided by three core principles:

- Ease of use: Installation and configuration are straightforward, even for users with limited experience in JAX or machine learning. Helpful defaults reduce friction while maintaining flexibility.

- Extensibility: mlip is a modular toolbox. Users can easily add new model architectures or preprocessing methods to suit their research needs.

- Inference efficiency: Many practical applications, especially in biology, depend on running large-scale simulations over long timescales. mlip prioritises fast, efficient inference while striving for state-of-the-art training performance.

What’s next?

Several major enhancements are planned for future iterations of mlip, including support for charged systems and new models capable of predicting charge-related properties.

Performance may also be improved through integration with faster backends—such as NVIDIA’s cuEquivariance library—and we aim to expand mlip with new foundation models trained on larger, more diverse datasets.

Importantly, mlip will remain open source. Comprehensive tutorials are available to help users customise and extend the library, and contributions from the wider research community are strongly encouraged.

Ready to simulate with near-quantum accuracy? Explore mlip on GitHub, and download the paper to dive deeper into the science behind the library.

[1] Aulifa et al: Elucidation of Molecular Interactions Between Drug–Polymer in Amorphous Solid Dispersion by a Computational Approach Using Molecular Dynamics Simulations. Advances and Applications in Bioinformatics and Chemistry. Dove Medical Press.

[2] Fang et al: Role of Molecular Dynamics Simulations in Drug Discovery. MetroTech Institute.

[3] Advancements and Applications of Molecular Dynamics Simulations in Scientific Research. MuseChem.

[4] Hugging Face: huggingface_hub documentation, inference client package reference. https://huggingface.co/docs/huggingface_hub/en/package_reference/inference_client