This research was authored by Scott Cameron at the University of Oxford and InstaDeep, in collaboration with Prof Stephen Roberts, Dr Arnu Pretorius of InstaDeep and Tyron Cameron of Discovery Insure South Africa. It is Cameron’s first publication from his PhD, which is funded by InstaDeep.

Stochastic differential equations (SDEs) have been consistently used across many fields to describe a wide range of spatio-temporal processes, as a natural extension to ordinary differential equations. More recently, SDE models have gathered interest in machine learning and current work focuses on efficient and scalable methods of inferring SDEs from data. Despite these advances, learning remains computationally expensive due to the sequential nature of SDE integrators.

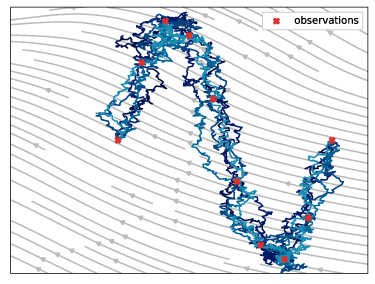

The new research – “Robust and Scalable SDE Learning: a Functional Perspective” – proposes a method that does not rely on sequential integrators. Instead, it uses path-space importance sampling, which produces significantly lower-variance gradient estimates and is highly parallelizable, so large-scale parallel hardware can be used effectively to cut computation time substantially.

About Stochastic Differential Equations

SDEs are a natural extension to ordinary differential equations which allow modelling of noisy and uncertain driving forces. These models are particularly appealing due to their flexibility in expressing highly complex relationships with simple equations, while retaining a high degree of interpretability. Much of the historical work on SDEs has focussed on understanding and modelling, particularly in dynamical systems and quantitative finance, while recent efforts have addressed machine learning and achieving efficient and scalable methods of inferring SDEs from data.
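For concreteness, an SDE is commonly written in the Itô form below. The symbols are standard textbook notation assumed here rather than taken from the research itself: $f$ is the drift, $g$ is the diffusion term and $W_t$ is a Brownian motion.

$$
\mathrm{d}X_t = f(X_t, t)\,\mathrm{d}t + g(X_t, t)\,\mathrm{d}W_t
$$

Setting $g = 0$ removes the noise and recovers an ordinary differential equation, which is the sense in which SDEs extend ODEs.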

The SDE Learning Problem

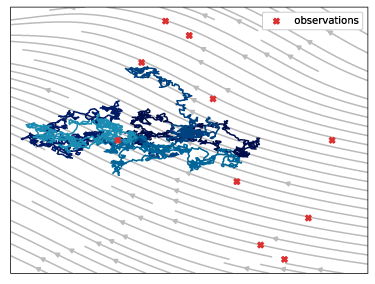

Learning SDEs from data requires repeatedly estimating a probability or expectation for a candidate SDE (e.g. one based on neural networks) and then updating the model to improve its fit. This expectation is usually estimated by integrating the candidate SDE using an algorithm such as Euler–Maruyama. This approach precludes parallelised operation and restricts scalability in several ways: the high variance of the gradient estimates; limits on the number of dimensions an SDE model can handle; the model’s ability to learn in higher dimensions; and the compute power needed to run sequential algorithms repeatedly.
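To illustrate why integrators of this kind are sequential, here is a minimal sketch of the Euler–Maruyama scheme for a one-dimensional SDE. It is a generic textbook version, not the code used in the research; the function name `euler_maruyama`, its parameters and the example drift and diffusion functions are all assumptions made for illustration.

```python
import math
import random


def euler_maruyama(drift, diffusion, x0, t_end, n_steps, rng):
    """Simulate one path of dX = drift(X, t) dt + diffusion(X, t) dW."""
    dt = t_end / n_steps
    path = [x0]
    for k in range(n_steps):
        t = k * dt
        x = path[-1]
        # Brownian increment over one step: normal with mean 0, variance dt.
        dw = rng.gauss(0.0, math.sqrt(dt))
        # Each new state depends on the previous one, so the time steps
        # of a single path cannot be computed in parallel.
        path.append(x + drift(x, t) * dt + diffusion(x, t) * dw)
    return path


# Hypothetical example: a mean-reverting process with constant noise.
rng = random.Random(0)
path = euler_maruyama(
    drift=lambda x, t: -x,
    diffusion=lambda x, t: 0.5,
    x0=1.0,
    t_end=1.0,
    n_steps=100,
    rng=rng,
)
```

In a learning loop, a simulation like this would be repeated for many sampled paths at every model update, which is where the sequential cost described above accumulates.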

A new path-based approach

After unsuccessfully trying to apply this type of model to specific problems, Cameron concluded that integrator algorithms were only capable of delivering mediocre results on small problems.

Looking afresh at the issue, a new approach using the mathematical theory of functional integration was employed. This approach considers the paths/trajectories as a whole and then selects the parts of the trajectories that offer precise results. In testing, the resulting model worked not only on small, 1-2 dimensional problems but also on much larger problems (5, 10, 20 and 100 dimensions). In each case, removing the sequential dependence gave lower gradient variance, better parallelisation and reduced compute time, with results up to 50x faster than the standard algorithm.

Reflecting on his work, Cameron said, “This research originated from the observation that changing from integrators to using a path-based, functional approach would allow us to create an algorithm that didn’t call for sequential dependence, further enabling us to easily scale, add multi-dimensions and utilise parallelisation without degrading the results.”

“The breakthrough is significant, enabling SDE research at scales that were not possible before and opening up numerous exciting avenues for future research. Scott is an exceptional researcher. This was clear even from his internship at InstaDeep working with the Cape Town team in early 2020, which culminated in co-authoring an accepted paper at NeurIPS, and now with a first-author publication at ICLR since starting his PhD. He is a clear example of the talent we have coming from South Africa. It is extremely exciting seeing these successes from top students,” said Arnu Pretorius, Research Scientist and team lead in Cape Town.

Future applications and collaboration

This research represents a breakthrough in the field and opens up many other exciting avenues for future research across quantitative finance and actuarial work, as well as physics-based topics such as Brownian motion, fluid dynamics, molecular dynamics and more.

Whilst the research is largely theoretical, employing small “proof of concept” testing, other real-life applications are being considered as a continuation of Cameron’s PhD research, alongside extensions into graph-based models and more.

The full research paper is available on arXiv.

Continuing success at ICLR

Following rigorous vetting and blind review, the research has been accepted as a conference paper at ICLR 2022. Acceptance to ICLR is strictly on merit, and this is the second year running that InstaDeep has had papers accepted, reaffirming the company’s record of boundary-pushing research initiatives.

About ICLR

The International Conference on Learning Representations (ICLR) is the premier gathering of professionals dedicated to the advancement of representation learning, the branch of artificial intelligence generally referred to as deep learning.

The 2022 event will run as a virtual conference from 25 to 29 April. Tickets for the event, the full schedule of talks and workshops, and details of the oral and poster presentations of selected papers are available on the ICLR website.

ICLR is globally renowned for presenting and publishing cutting-edge research on all aspects of deep learning used in the fields of artificial intelligence, statistics and data science, as well as important application areas such as machine vision, computational biology, speech recognition, text understanding, gaming, and robotics.

Participants at ICLR span a wide range of backgrounds, from academic and industrial researchers, to entrepreneurs and engineers, to graduate students and postdocs.